TIME: September 2024 – January 2025

COST: from 3.4 million rubles

TEAM

- ML engineer: Mixtral deployment, tokenization setup, fine-tuning pipelines

- Data engineer / RAG: knowledge base preparation, document cleaning and segmentation, vectorization

- Backend developer: API, LangChain integration with the LLM and the vector database

- Frontend developer: chat widget development, scenario logic, dialog UX

- Product / AI analyst: scenario design, intents, testing, assistant training

- Technical writer: preparation of reference materials and explanations, structure of concepts, tone adaptation

STACK

- LLM: Mixtral 8x7B (MoE mode, 2 active experts)

- RAG: LangChain (Python), orchestration of logic and scenarios

- Vector database: Qdrant (in production) or FAISS (for development)

- Text processing: SentenceTransformers (all-MiniLM, bge-small-ru)

- API: FastAPI

- Interface: React component (chat), integrated into the existing frontend

- Data: PostgreSQL (properties, users, scenarios), JSONL (FAQ, glossary of terms)

About the client and their goals

The client, who is under NDA, owns a real estate investment platform. Most of the service's customers are private investors who invest in apartments, commercial real estate, and projects with rental or resale income. The team is actively developing the product: expanding the property database, improving analytics, and strengthening the channel for attracting newcomers.

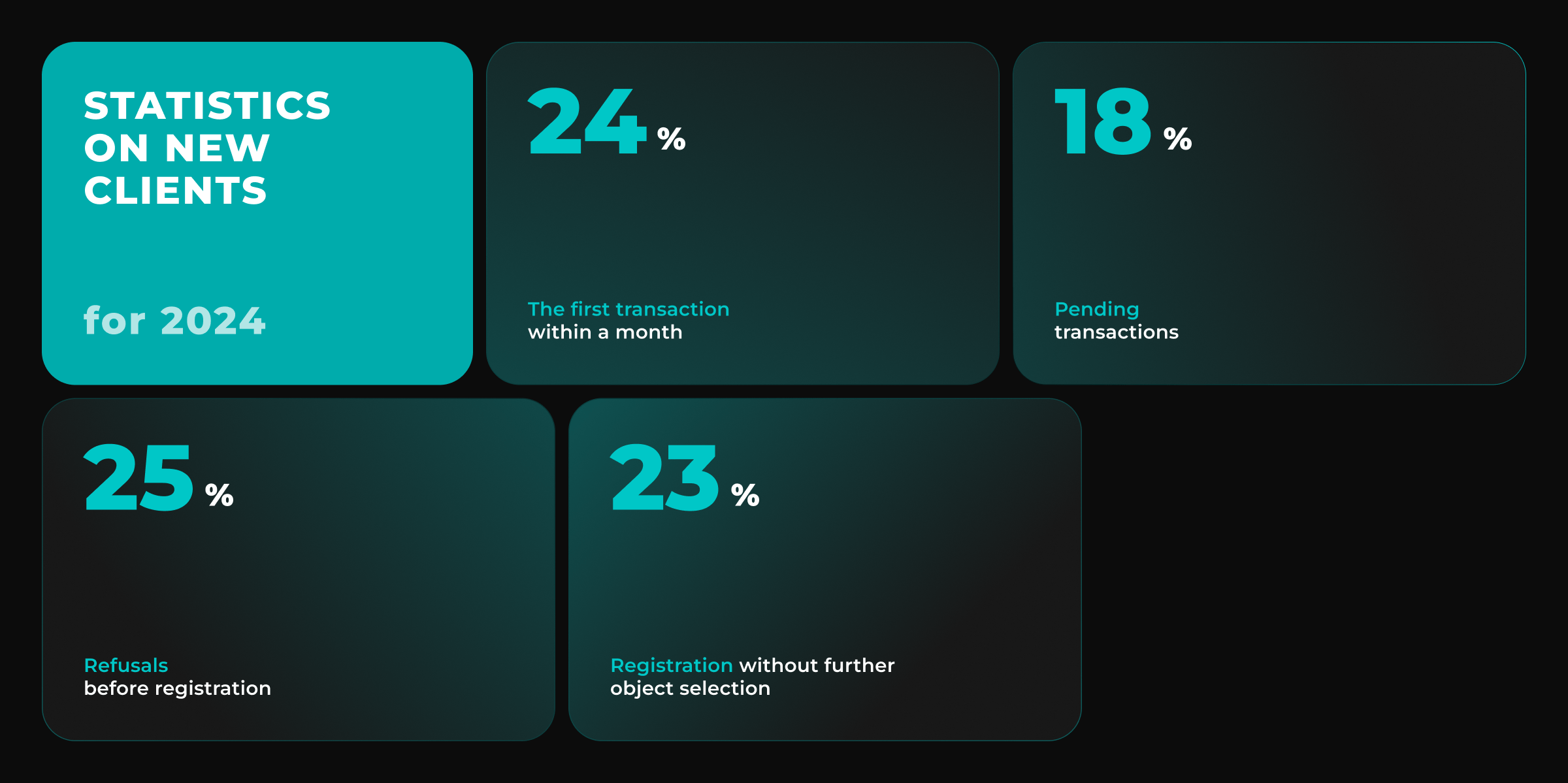

The main problem is losing users before they submit an application. An audit of behavioral scenarios showed that:

- Users cannot choose: there are too many similar properties, complex parameters (IRR, Cap Rate, NOI), and unclear terminology.

- Newcomers ask support the same questions: "what to choose", "what is the difference", "what are the risks".

- 58% of new visits end before the user saves a property or registers.

- The sales department receives only warm and hot leads and does not see the behavior of hesitant users.

As a result, the idea was not just to automate responses but to create a full-fledged AI navigation assistant that:

- speaks the investor's language

- adapts to the user's level

- helps the user navigate the data without pressure

- works completely independently, without relying on external APIs and without transferring data outside the infrastructure

The goal is to shorten the path from interest to decision, reduce the burden on managers and turn the platform into a convenient tool for making investment decisions.

Task

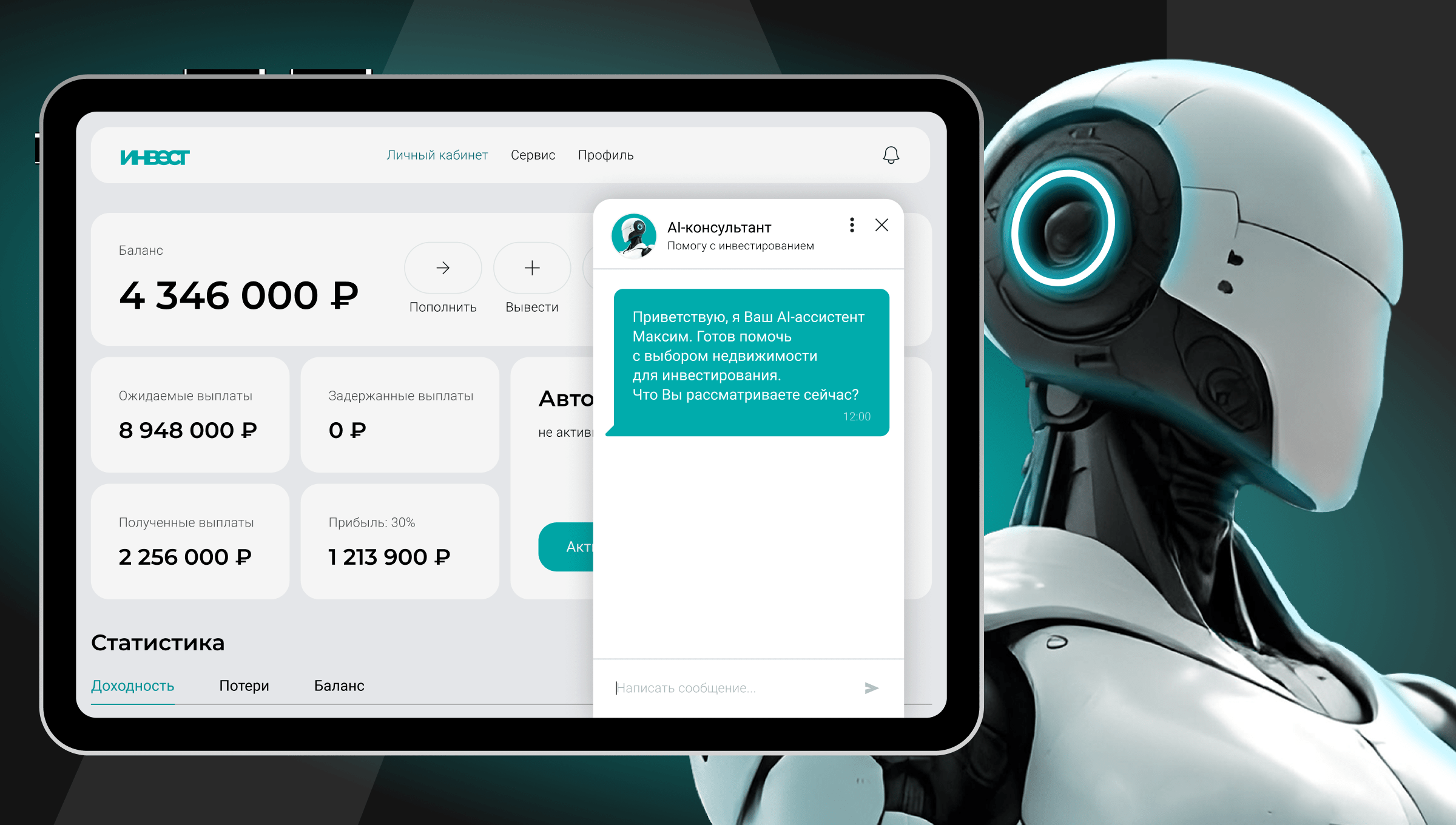

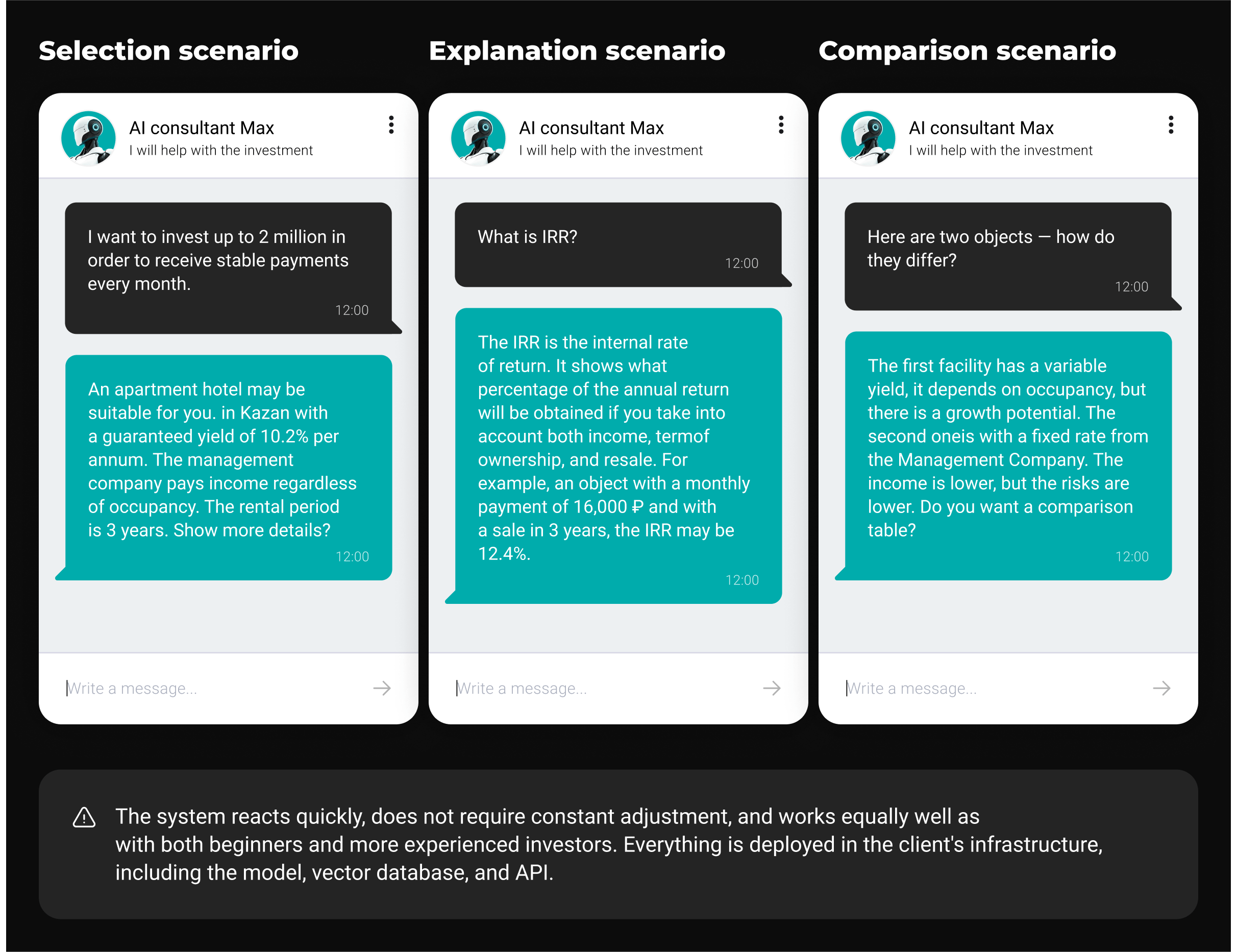

The task was to build AI into the platform as an intelligent copilot assistant that:

helps the user select investment properties based on budget, goals (rental income / capital gains / balanced strategy), investment horizon and risk tolerance

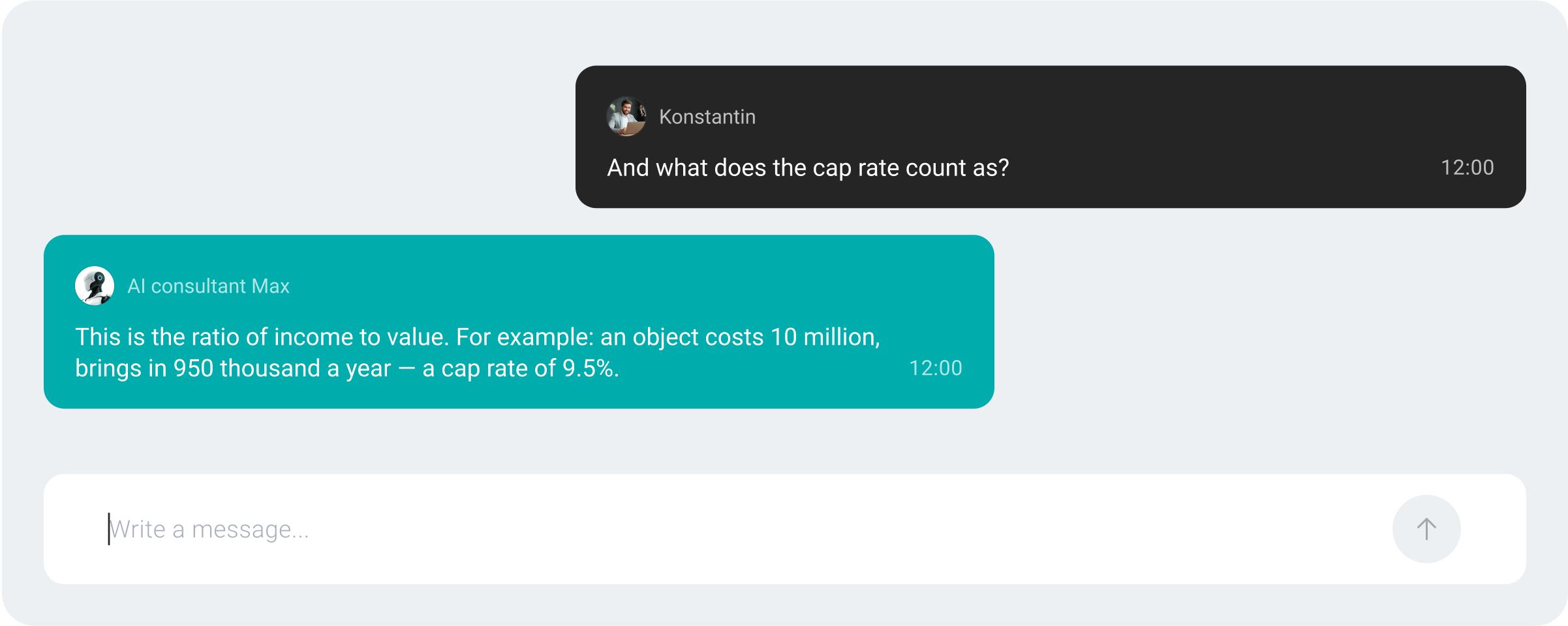

explains financial terms and metrics in plain language, with examples from current properties

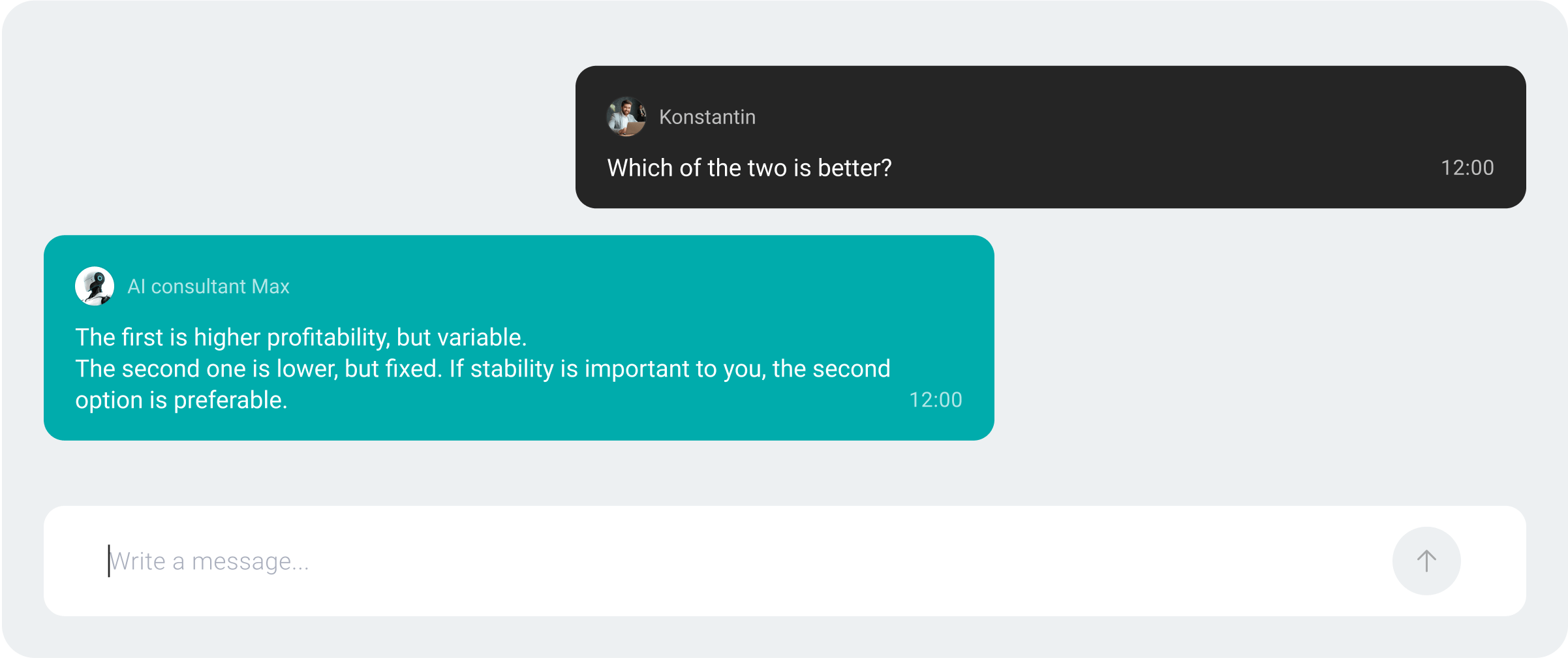

compares offers with each other and explains how they differ

accompanies the user through the dialogue without losing context, adapting to the user's level of experience

works completely autonomously, without accessing external APIs, in order to meet the requirements for security, data control, and response speed
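The selection criteria from the first item (budget, goal, horizon, risk attitude) can be pictured as a simple filter over the property catalog. This is only an illustrative sketch: the `Property` and `InvestorProfile` fields, the 1 to 3 risk scale, and the sample data are hypothetical and do not come from the client's actual schema.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Property:
    # All fields are hypothetical; the real catalog lives in PostgreSQL.
    name: str
    price: float
    strategy: str           # "rental", "growth", or "balanced"
    min_horizon_years: int  # shortest sensible holding period
    risk_level: int         # 1 (low) to 3 (high), an assumed scale


@dataclass
class InvestorProfile:
    budget: float
    goal: str               # same vocabulary as Property.strategy
    horizon_years: int
    max_risk: int


def match_properties(profile: InvestorProfile, catalog: List[Property]) -> List[Property]:
    """Keep only properties that fit every criterion in the profile."""
    return [
        p for p in catalog
        if p.price <= profile.budget
        and p.strategy == profile.goal
        and p.min_horizon_years <= profile.horizon_years
        and p.risk_level <= profile.max_risk
    ]


catalog = [
    Property("Studio A", 6_000_000, "rental", 3, 1),
    Property("Office B", 15_000_000, "rental", 5, 2),
    Property("Project C", 5_000_000, "growth", 2, 3),
]
profile = InvestorProfile(budget=8_000_000, goal="rental", horizon_years=5, max_risk=2)
matches = match_properties(profile, catalog)
print([p.name for p in matches])
```

In practice the assistant would gather the profile through dialogue and explain the shortlist rather than just return it.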

What we did

We developed an autonomous AI assistant based on Mixtral, using LangChain and Retrieval-Augmented Generation (RAG). The assistant works as part of the investment platform: it helps the user choose a suitable property, explains terms, compares offers, and accompanies the user all the way from initial interest to submitting an application.

The solution is deployed in the client's infrastructure and does not depend on external LLMs or APIs. All data is processed and stored locally, which is important to comply with internal security and control requirements.

How it works

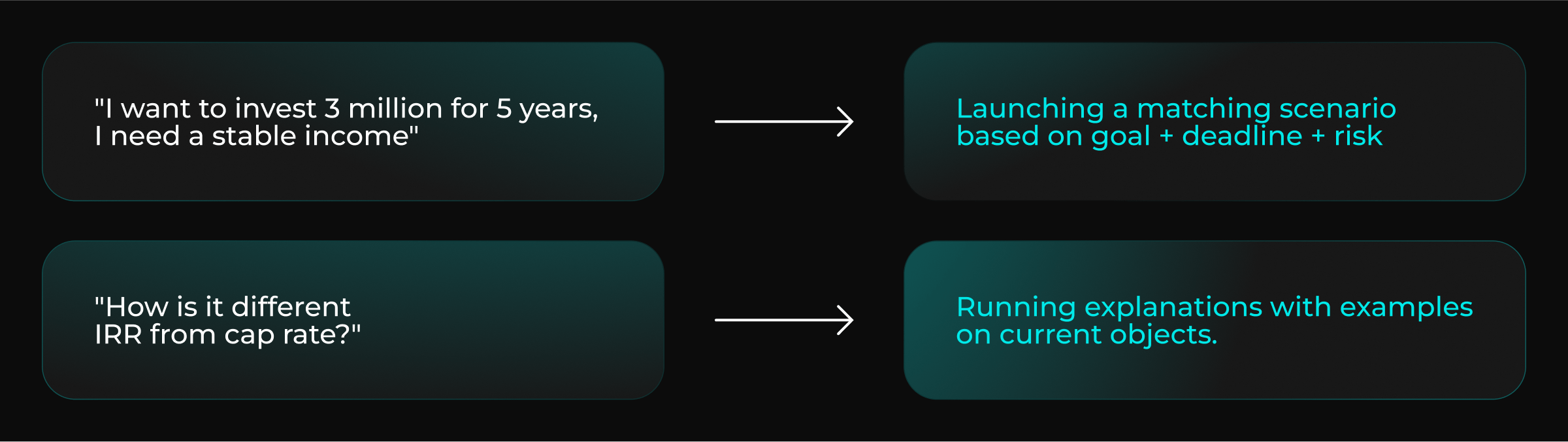

1. Scenario logic based on LangChain

The assistant processes incoming messages, determines the intent (selection, comparison, explanation, clarification) and launches the matching scenario. It uses LangChain chains (ConversationalRetrievalChain) with the dialog history kept in memory.
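The routing step can be sketched roughly as follows. This is a minimal illustration, not the project's code: the keyword lists, `detect_intent`, and the scenario handlers are hypothetical stand-ins for whatever classifier and LangChain chains the real system uses.

```python
from typing import Callable, Dict, Tuple

# Hypothetical keyword lists; a production system would more likely use
# a trained classifier or an LLM prompt to detect intent.
INTENT_KEYWORDS: Dict[str, Tuple[str, ...]] = {
    "comparison": ("compare", "difference", "versus", " vs "),
    "explanation": ("what is", "what does", "explain", "mean"),
    "selection": ("choose", "recommend", "suggest", "budget", "invest"),
}


def detect_intent(message: str) -> str:
    """Return the first intent whose keywords appear in the message."""
    text = f" {message.lower()} "
    for intent, keywords in INTENT_KEYWORDS.items():
        if any(keyword in text for keyword in keywords):
            return intent
    return "clarification"  # fallback: ask the user a follow-up question


# Placeholder handlers; in the real system each would run its own chain.
SCENARIOS: Dict[str, Callable[[str], str]] = {
    "comparison": lambda msg: "run comparison scenario",
    "explanation": lambda msg: "run explanation scenario",
    "selection": lambda msg: "run selection scenario",
    "clarification": lambda msg: "ask a clarifying question",
}


def route(message: str) -> str:
    """Detect the intent and dispatch the message to the matching scenario."""
    return SCENARIOS[detect_intent(message)](message)


print(route("What is Cap Rate?"))
```

Checking the intents in a fixed order keeps the behavior predictable when a message matches several keyword lists.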

2. Knowledge base with a RAG layer

Documents (property descriptions, FAQ, analytics, terms) are structured, segmented, and indexed in Qdrant. For each user request, the assistant searches for relevant fragments and passes them to the Mixtral model to generate a response.

We set up:

- contextual search across property descriptions, profitability parameters, and management companies

- a built-in glossary of financial and legal terms

- thematic hints: "profitability with management", "guaranteed payments"
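The segmentation and retrieval steps of the RAG layer can be illustrated with a small self-contained sketch. It deliberately swaps in a toy bag-of-words similarity for the real SentenceTransformers embeddings and an in-memory list for Qdrant, so it runs without external services; the function names and chunk sizes are assumptions made for the example.

```python
import math
import re
from collections import Counter
from typing import List, Tuple


def split_into_chunks(text: str, size: int = 40, overlap: int = 10) -> List[str]:
    """Split text into windows of `size` words that overlap by `overlap` words."""
    words = text.split()
    step = size - overlap  # assumes size > overlap
    chunks = []
    for start in range(0, len(words), step):
        chunks.append(" ".join(words[start:start + size]))
        if start + size >= len(words):
            break  # the last window already reached the end of the text
    return chunks


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; stands in for a real embedding model."""
    return Counter(re.findall(r"\w+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve(query: str, chunks: List[str], top_k: int = 2) -> List[Tuple[float, str]]:
    """Return the top_k chunks most similar to the query, best first."""
    query_vec = embed(query)
    scored = [(cosine(query_vec, embed(chunk)), chunk) for chunk in chunks]
    return sorted(scored, key=lambda pair: pair[0], reverse=True)[:top_k]


knowledge_base = [
    "Cap Rate is net operating income divided by property price.",
    "The management company handles tenants and repairs.",
    "Guaranteed payments are fixed by contract.",
]
best_score, best_chunk = retrieve("what is cap rate", knowledge_base, top_k=1)[0]
print(best_chunk)
```

The retrieved fragments would then be placed into the prompt for the model; with real embeddings, matching works on meaning rather than on shared words.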

3. Mixtral as the generation core

Mixtral 8x7B is used in MoE (Mixture of Experts) mode. This provides acceptable generation speed on the local server and good response quality in Russian.

The model was not trained from scratch; we fine-tuned it on:

- historical user dialogues

- typical requests to managers

- documents from the client's knowledge base

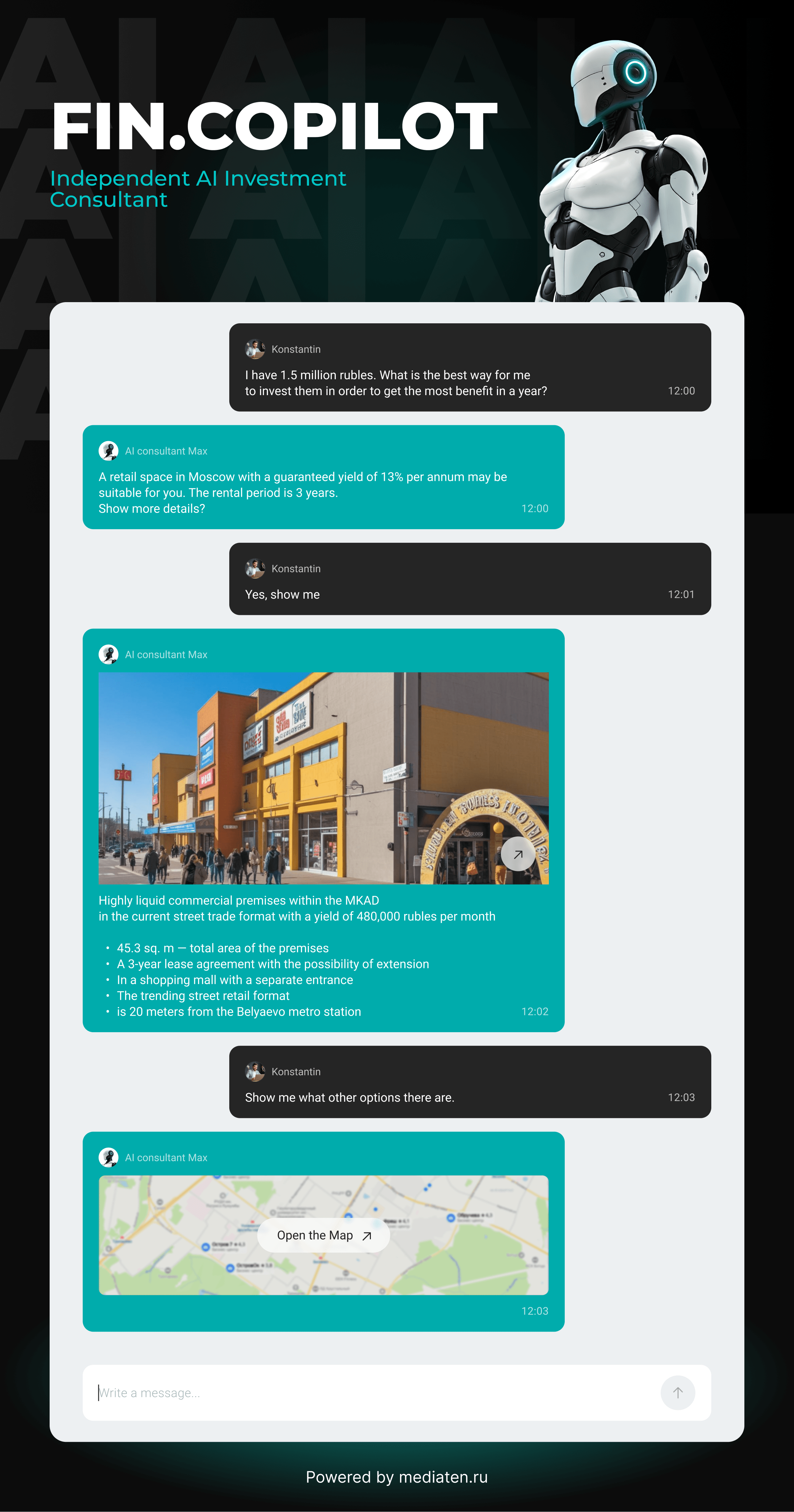

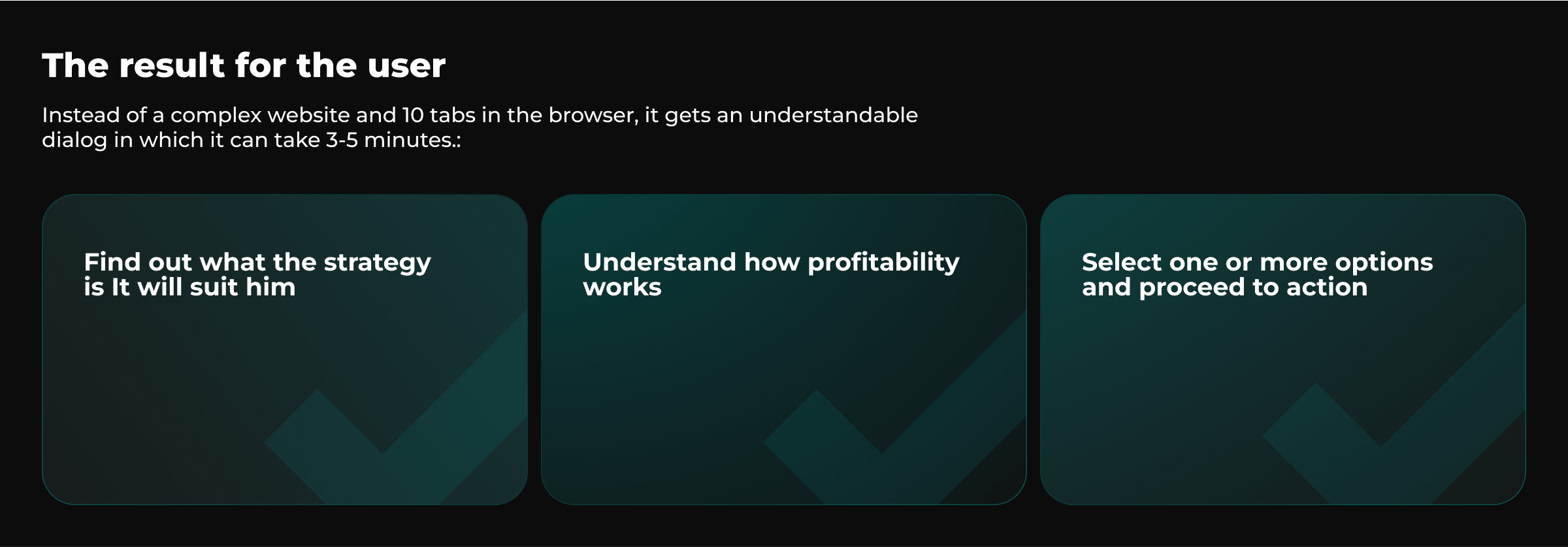

How an AI assistant makes life easier for the user

The AI assistant has become the entry point to the platform for users who previously never reached the point of choosing a property. It reduces information overload, guides the user through the funnel, and helps figure out what suits a particular person, without calls, PDFs, or complicated calculators.

1. Helps you navigate investment strategies

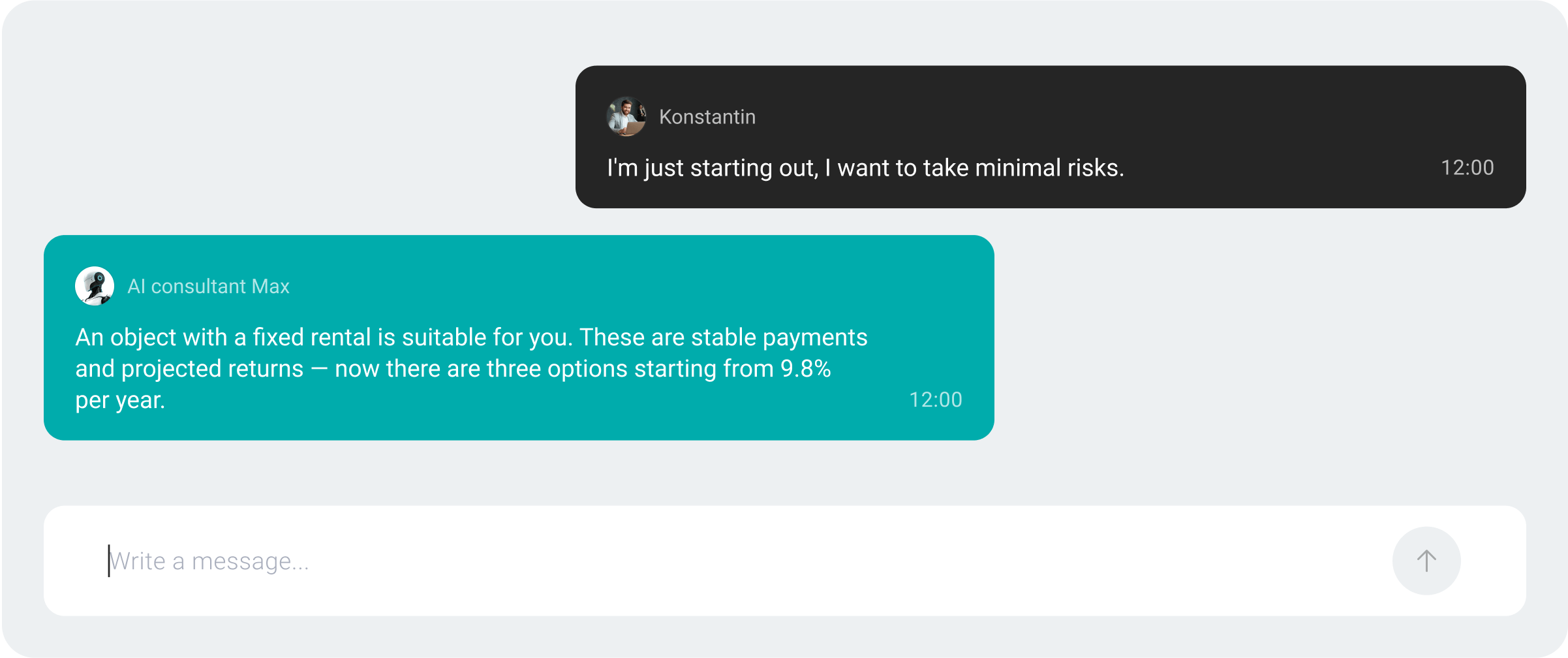

Many users don't know where to start: "where to invest," "for how long," "how the formats differ." The AI assistant asks 2-3 simple questions and suggests suitable strategies.

2. Explains financial terms and metrics

Most newcomers drop off when they run into unfamiliar terms. The assistant translates complex metrics into plain language, provides examples, and explains the calculations for specific properties.
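As an illustration of the kind of calculation the assistant walks through, here is a small sketch of two of the metrics named earlier, using their standard definitions: NOI is annual rental income minus operating expenses, and Cap Rate is NOI divided by the property price. The function names and all figures are hypothetical and are not taken from the platform's data.

```python
def net_operating_income(annual_rent: float, annual_operating_expenses: float) -> float:
    """NOI: rental income left after operating expenses (before loan payments and taxes)."""
    return annual_rent - annual_operating_expenses


def cap_rate(noi: float, property_price: float) -> float:
    """Cap Rate: NOI as a share of the property price."""
    if property_price <= 0:
        raise ValueError("property_price must be positive")
    return noi / property_price


def explain(annual_rent: float, expenses: float, price: float) -> str:
    """Produce a plain-language explanation of the calculation."""
    noi = net_operating_income(annual_rent, expenses)
    rate = cap_rate(noi, price)
    return (
        f"The property earns {annual_rent:,.0f} a year in rent and costs "
        f"{expenses:,.0f} a year to run, so it nets {noi:,.0f}. "
        f"Relative to its price of {price:,.0f}, that is a Cap Rate of {rate:.1%}."
    )


# Hypothetical example figures in rubles.
print(explain(annual_rent=960_000, expenses=160_000, price=10_000_000))
```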

3. Compares objects and explains the difference

The assistant doesn't just return a list: it explains how the offers differ, where the income is higher and where the risk is lower. This helps the user decide faster and with better understanding.

4. Removes barriers and saves time

The user does not need to study the website, search the FAQ, or make phone calls. The assistant selects, explains, and leads to action. In effect, it replaces the first 20 minutes of a conversation with a manager, but without the pressure.

5. Reassures instead of intimidating

The interface and wording are designed to relieve anxiety. This is especially important for those making their first investment. The assistant does not rush, does not push, and does not slip into robotic "bot" phrasing. It stays simple and to the point.

Challenges in implementing AI

The project required high accuracy, autonomy, and user trust. We understood from the start that simple text generation would not be enough: the system has to explain rather than invent, avoid overloading the user, and adapt to the person's level. All of this had to work without external APIs, in a local hosting environment.

1. Response control and reliability

The main challenge is to teach the model to respond strictly within the framework of verified data, without "hallucinations".

What we did

- We built the pipeline on Retrieval-Augmented Generation: all responses are generated only from pre-uploaded documents.

- We introduced restrictions on the length and type of sources the model can use.

- We implemented manual filtering: the assistant does not give advice like "put your money here", but only explains and shows the options.
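The measures above might look roughly like this in code. The sketch assumes a hand-written phrase list as one possible form of manual filtering and arbitrary limits on the number and length of sources; the constants, the prompt wording, and the function names are all hypothetical.

```python
from typing import List

MAX_FRAGMENTS = 3          # hypothetical cap on how many sources reach the model
MAX_FRAGMENT_CHARS = 500   # hypothetical cap on the length of each source

# Hypothetical phrases that signal a direct investment recommendation.
DIRECTIVE_PHRASES = (
    "you should invest",
    "you should buy",
    "i recommend buying",
    "put your money",
)


def build_grounded_prompt(question: str, fragments: List[str]) -> str:
    """Build a prompt that restricts the model to the retrieved sources."""
    selected = [fragment[:MAX_FRAGMENT_CHARS] for fragment in fragments[:MAX_FRAGMENTS]]
    context = "\n".join(f"[{i}] {fragment}" for i, fragment in enumerate(selected, start=1))
    return (
        "Answer using only the sources below. "
        "If the sources do not contain the answer, say you do not know.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}\nAnswer:"
    )


def contains_directive_advice(answer: str) -> bool:
    """Flag answers that tell the user what to buy instead of explaining options."""
    lowered = answer.lower()
    return any(phrase in lowered for phrase in DIRECTIVE_PHRASES)


prompt = build_grounded_prompt("What is NOI?", ["a", "b", "c", "d"])
print(prompt)
print(contains_directive_advice("You should buy this flat"))
```

A flagged answer could be regenerated or replaced with a neutral comparison of options; which of these the project does is not stated in the source.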

2. Simplification of language without loss of meaning

Business terms often sound formal and scare off newcomers.

What we did

- We set up explanation templates in plain Russian.

- We additionally fine-tuned Mixtral on examples of "human" phrasing.

- We introduced a "details on request" mechanic: the assistant answers briefly first, and the user can click a button for a detailed explanation.

3. Technical constraints of running locally

Mixtral is a large model, and it needs substantial resources to run.

What we did

- We ran the model in MoE (Mixture of Experts) mode: only 2 of the 8 experts are active for each token, which reduces the compute load.

- We optimized inference with vLLM, which gives fast startup even on custom GPU infrastructure.

- We trained the client's team to manage and restart the model and to update the knowledge base without our help.
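To show why activating 2 of 8 experts saves compute, here is a toy sketch of top-2 expert routing. It is a simplified illustration of the general technique, not Mixtral's implementation: in the real model the router and experts are neural network layers applied per token inside each transformer block, while here the experts are plain functions and the router scores are given by hand.

```python
import math
from typing import Callable, List, Sequence, Tuple


def top2_gate(router_logits: Sequence[float]) -> List[Tuple[int, float]]:
    """Pick the 2 highest-scoring experts and softmax-normalize their weights."""
    ranked = sorted(range(len(router_logits)), key=lambda i: router_logits[i], reverse=True)[:2]
    max_logit = router_logits[ranked[0]]
    # Subtracting the max keeps exp() numerically stable.
    exps = [math.exp(router_logits[i] - max_logit) for i in ranked]
    total = sum(exps)
    return [(i, e / total) for i, e in zip(ranked, exps)]


def moe_layer(x: float, router_logits: Sequence[float],
              experts: Sequence[Callable[[float], float]]) -> float:
    """Weighted sum of the two selected experts; the other six are never called."""
    return sum(weight * experts[i](x) for i, weight in top2_gate(router_logits))


# Eight toy experts: expert k multiplies its input by (k + 1).
experts = [lambda x, k=k: x * (k + 1) for k in range(8)]
logits = [0.1, 2.0, -1.0, 0.5, 2.0, 0.0, -0.3, 1.0]  # hand-written router scores

gate = top2_gate(logits)
output = moe_layer(1.0, logits, experts)
print(gate, output)
```

Only the selected experts do any work for a given input, which is where the compute saving comes from; all eight still have to be held in memory.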

4. Training on the client's own data

Mixtral did not know the specifics of real estate, especially the Russian market.

What we did

- We collected a dataset from real support requests (anonymized).

- We added terms, explanations, sample scenarios, and dialogues.

- We used this corpus for instruction tuning of the model (supervised fine-tuning), oriented toward the investor's perspective rather than the property developer's.
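Preparing such a dataset could look roughly like the sketch below: contact details are masked, and each question and answer pair is written as one JSONL record. The regular expressions, the placeholder tokens, and the `messages` record layout are assumptions for illustration; the source does not describe the actual anonymization rules or training format.

```python
import json
import re
from typing import Dict, Iterable, List, Tuple

# Simplified patterns; real anonymization would need broader coverage
# (names, addresses, contract numbers) and manual review.
EMAIL_RE = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE_RE = re.compile(r"\+?\d[\d\s()-]{8,}\d")


def anonymize(text: str) -> str:
    """Replace e-mail addresses and phone numbers with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return PHONE_RE.sub("[PHONE]", text)


def to_sft_record(question: str, answer: str) -> Dict[str, List[Dict[str, str]]]:
    """Wrap one support exchange in a chat-style training record (assumed layout)."""
    return {
        "messages": [
            {"role": "user", "content": anonymize(question)},
            {"role": "assistant", "content": anonymize(answer)},
        ]
    }


def to_jsonl(pairs: Iterable[Tuple[str, str]]) -> str:
    """Serialize question/answer pairs as JSONL, one record per line."""
    return "\n".join(
        json.dumps(to_sft_record(question, answer), ensure_ascii=False)
        for question, answer in pairs
    )


sample = [(
    "What is NOI? Write to ivan@example.com or call +7 (999) 123-45-67 please",
    "NOI is the rental income left after operating expenses.",
)]
jsonl = to_jsonl(sample)
print(jsonl)
```

`ensure_ascii=False` keeps Russian text readable in the output file instead of escaping it.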

Results and development

After the assistant went into production, the client received not just a dialogue interface but a new way to work with users in the early stages of the funnel: without overloading managers, without loss of accuracy, and with complete autonomy.

- +21% application conversion rate among users who interacted with the assistant (compared to the control group).

- Up to 70% of typical questions are handled by the AI, without involving support.

- The load on managers decreased by ~40%, and they began to focus more on accompanying "warm" clients than on repeated explanations.

- The marketing team gained a new source of insights: the dialogue logs showed which doubts, wording, and goals users voice most often, and this directly affected the content, advertising messages, and presentation of properties.

How the project is developing

- Personalization based on the user profile: the assistant takes into account past choices, interests, and risk appetite and suggests scenarios tailored to the specific person.

- Expanding the knowledge base with legal questions, calculation templates, early exit conditions, and taxation, all with explanations and based on real data.

- Variable scenarios: planned functions include comparing properties, generating a short summary, and preparing for a conversation with a manager, including exporting a PDF at the user's request.

The key effect

AI has become not just a tool for the client, but part of an investment service that makes entering a complex topic easier, and the selection process clearer and more confident.

The system is fully managed within the team: it does not depend on external APIs, does not require complex support, and scales to accommodate the growth of the base, users, and functionality.